As autonomous driving research advances rapidly, developers and researchers need manageable, accessible ways to experiment with the core technologies behind self-driving vehicles, from vehicle dynamics modeling to state estimation, error-state estimation, and control. That is precisely the goal of this project, which is made up of modular parts designed to introduce and illustrate the fundamental components of a self-driving system.

Introduction

This project bundles multiple focused modules that together cover essential components of an autonomous driving stack. Some of the main modules include:

Vehicle Modeling: Simulate basic vehicle dynamics (lateral, longitudinal) under various conditions.

State Estimation: Predict the state from motion models (e.g. driven by inertial data) and correct it with sensor observations (GNSS, LIDAR) through a simple fusion pipeline.

Control: Implement controllers for lateral behavior (steering to reduce cross-track and heading error) and longitudinal behavior (speed tracking through throttle and brake).

Standalone Simulation & Integration: Combine modeling, estimation, and control in a minimal simulation setup built on the Carla simulator, so you can test how the different parts interact.

Vehicle Modeling Module

Accurate vehicle modeling is the backbone of any autonomous driving system. Before a controller can decide how a vehicle should move, it must understand how the vehicle responds to forces and steering inputs. Two models capture complementary aspects of vehicle motion: the longitudinal model and the lateral model.

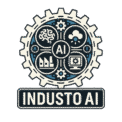

The longitudinal model describes how the vehicle accelerates or slows down based on engine force, braking force, aerodynamic drag, rolling resistance, and road inclination. This model follows a classical physics formulation, expressing how these forces combine to produce forward acceleration or deceleration.
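As a rough illustration of this force balance, the sketch below integrates m * dv/dt = F_engine - F_brake - F_aero - F_roll - F_grade with forward Euler. The vehicle parameters, the lumped quadratic drag coefficient, and the function names are assumptions made for illustration; they are not values or code taken from the project's repository.

```python
import math

# Hypothetical parameters (SI units), chosen for illustration only.
MASS = 1500.0    # vehicle mass, kg
G = 9.81         # gravitational acceleration, m/s^2
C_AERO = 0.4     # lumped drag coefficient 0.5*rho*Cd*A, N/(m/s)^2
C_ROLL = 0.01    # rolling-resistance coefficient, dimensionless


def longitudinal_accel(v, f_engine, f_brake, slope_rad):
    """Net forward acceleration from m*a = F_engine - F_brake - F_aero - F_roll - F_grade."""
    f_aero = C_AERO * v * v                                             # quadratic aerodynamic drag
    f_roll = C_ROLL * MASS * G * math.cos(slope_rad) if v > 0 else 0.0  # only while rolling forward
    f_grade = MASS * G * math.sin(slope_rad)                            # an uphill slope resists motion
    return (f_engine - f_brake - f_aero - f_roll - f_grade) / MASS


def simulate_longitudinal(v0, f_engine, f_brake, slope_rad, dt=0.01, steps=1000):
    """Forward-Euler integration; returns (distance travelled, final speed)."""
    x, v = 0.0, v0
    for _ in range(steps):
        a = longitudinal_accel(v, f_engine, f_brake, slope_rad)
        v = max(0.0, v + a * dt)  # simplification: the vehicle never rolls backwards
        x += v * dt
    return x, v
```

With a constant 2000 N engine force on a flat road, the speed should settle where engine force balances drag plus rolling resistance, sqrt((2000 - C_ROLL*MASS*G) / C_AERO), which is about 68 m/s with these made-up numbers.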

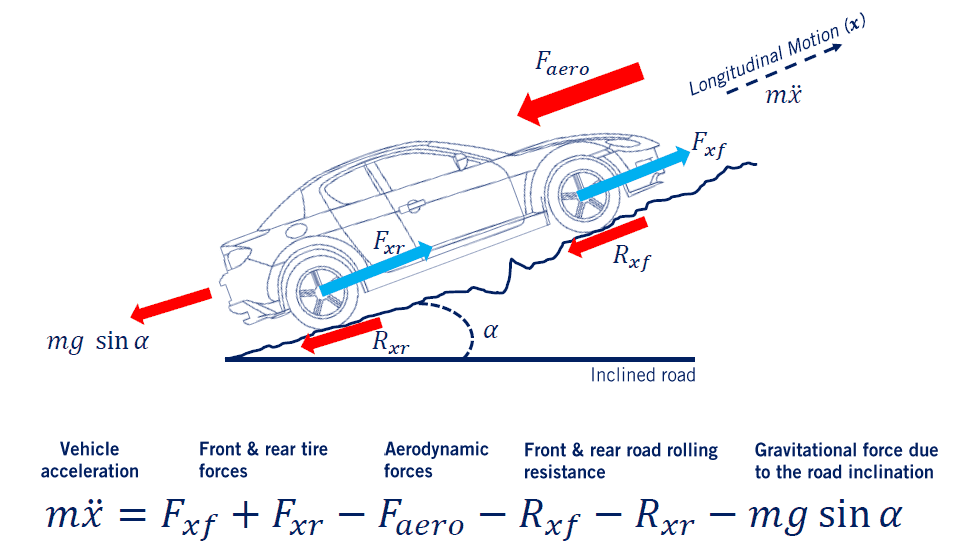

Complementing this is the lateral model, which captures how the vehicle moves sideways and rotates around its vertical axis. This behavior depends on the steering angle, vehicle speed, and tire dynamics. The model uses a simplified bicycle representation that shows how steering inputs generate lateral forces and yaw motion. Understanding this relationship is essential for trajectory tracking, lane keeping, and path following, where the vehicle must accurately orient itself along a desired route.
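One common way to write such a model is the linear dynamic bicycle model, sketched below under the assumptions of constant forward speed v_x, small angles, and linear tire forces F_y = C * alpha (cornering stiffness times slip angle). The text does not specify which bicycle variant the project uses, so this form, the parameter values, and the names are all hypothetical.

```python
import math

# Hypothetical mid-size-car parameters (SI units), for illustration only.
M = 1500.0                  # mass, kg
IZ = 2500.0                 # yaw moment of inertia, kg*m^2
LF, LR = 1.2, 1.6           # distance from CG to front / rear axle, m
CF, CR = 80000.0, 80000.0   # front / rear axle cornering stiffness, N/rad


def bicycle_step(vy, r, delta, vx, dt):
    """One Euler step of the linear dynamic bicycle model (lateral velocity vy, yaw rate r)."""
    alpha_f = delta - (vy + LF * r) / vx  # front tire slip angle
    alpha_r = -(vy - LR * r) / vx         # rear tire slip angle
    fyf, fyr = CF * alpha_f, CR * alpha_r  # linear tire lateral forces
    vy_dot = (fyf + fyr) / M - vx * r
    r_dot = (LF * fyf - LR * fyr) / IZ
    return vy + vy_dot * dt, r + r_dot * dt


def simulate_lateral(delta, vx=15.0, dt=0.01, steps=500):
    """Apply a constant steering angle and integrate the global pose (X, Y, psi)."""
    vy = r = psi = X = Y = 0.0
    for _ in range(steps):
        vy, r = bicycle_step(vy, r, delta, vx, dt)
        psi += r * dt
        X += (vx * math.cos(psi) - vy * math.sin(psi)) * dt
        Y += (vx * math.sin(psi) + vy * math.cos(psi)) * dt
    return vy, r, X, Y, psi
```

A useful sanity check: at steady state this model reproduces the textbook yaw-rate gain r = v_x * delta / (L + K * v_x^2), where L = l_f + l_r and K = (m / L) * (l_r / C_f - l_f / C_r) is the understeer gradient.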

Position Estimation

Once vehicle motion can be simulated, the next challenge is estimating the vehicle's true position and orientation. The project models this with two complementary components: an IMU-based motion model that predicts the state at high frequency but accumulates drift, and a position-observation module that simulates slower but more accurate sensors such as GNSS or LIDAR. The two are fused with a Kalman filter, which blends the rapid predictions with the reliable corrections to produce a stable, accurate estimate of the vehicle's pose. This mirrors how real autonomous systems maintain robust localization even when individual sensors are noisy or imperfect.
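The fusion idea can be sketched in one dimension, assuming a state of [position, velocity]: a simulated accelerometer with a made-up constant bias drives the prediction at 100 Hz, and a simulated GNSS position fix corrects it once per second. All names, noise levels, rates, and the acceleration profile are assumptions for illustration, not the project's configuration.

```python
import numpy as np


def run_fusion(T=20.0, dt=0.01, gnss_period=1.0, accel_bias=0.1,
               accel_std=0.1, gnss_std=0.5, seed=0):
    """1D IMU + GNSS Kalman filter sketch; returns (dead_reckoning_error, kf_error) in meters."""
    rng = np.random.default_rng(seed)
    F = np.array([[1.0, dt], [0.0, 1.0]])  # transition for [position, velocity]
    B = np.array([0.5 * dt**2, dt])         # how an acceleration input enters the state
    H = np.array([[1.0, 0.0]])              # GNSS observes position only
    q = 1.0                                 # assumed accel noise std, deliberately inflated to absorb the unmodeled bias
    Q = q**2 * np.outer(B, B)               # process noise from acceleration uncertainty
    R = np.array([[gnss_std**2]])           # GNSS measurement noise

    x_true = np.zeros(2)
    x_dr = np.zeros(2)   # IMU-only dead reckoning, for comparison
    x_kf = np.zeros(2)   # Kalman filter estimate
    P = np.eye(2)        # initial estimate covariance
    gnss_every = round(gnss_period / dt)

    for k in range(1, round(T / dt) + 1):
        a_true = 0.5 * np.sin(0.3 * k * dt)                       # made-up true acceleration
        a_imu = a_true + accel_bias + rng.normal(0.0, accel_std)   # biased, noisy accelerometer
        x_true = F @ x_true + B * a_true
        x_dr = F @ x_dr + B * a_imu

        # Predict at IMU rate.
        x_kf = F @ x_kf + B * a_imu
        P = F @ P @ F.T + Q

        # Correct at GNSS rate.
        if k % gnss_every == 0:
            z = x_true[0] + rng.normal(0.0, gnss_std)
            y = z - H @ x_kf                    # innovation, shape (1,)
            S = H @ P @ H.T + R                 # innovation covariance, shape (1, 1)
            K = P @ H.T @ np.linalg.inv(S)      # Kalman gain, shape (2, 1)
            x_kf = x_kf + K @ y
            P = (np.eye(2) - K @ H) @ P

    return abs(x_dr[0] - x_true[0]), abs(x_kf[0] - x_true[0])
```

With the default settings, dead reckoning drifts by roughly 0.5 * bias * T^2 (about 20 m), while the filtered estimate should stay much closer to the truth because each GNSS fix pulls the accumulated drift back.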

Error State Estimation

Error-state estimation focuses on identifying and correcting the small deviations that build up between a system's predicted state and its true state over time. Instead of re-estimating the full state directly, the method propagates a nominal state from the motion model and tracks only the errors, such as slight drifts in position, velocity, or orientation, that arise from sensor noise, modeling simplifications, or numerical integration. These error terms are updated using available sensor measurements, the estimated errors are injected back into the nominal state, and the error estimate is then reset to zero. Because the errors stay small, the linearization used by the filter remains accurate, which makes the estimation process more stable and efficient and allows the system to maintain accurate localization even when individual sensors are imperfect or noisy.
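To make the predict, correct, inject, and reset cycle concrete, here is a one-dimensional toy sketch. The nominal state (position, velocity, and an accelerometer-bias estimate) is propagated directly from IMU readings, while the filter tracks only the covariance of the error state [dp, dv, db]; each GNSS fix yields an error estimate that is injected into the nominal state and then reset to zero. This is an assumption-laden illustration: a vehicle ESKF would also carry an orientation error (typically a 3-vector in the rotation's tangent space), and every name, noise value, and the bias itself are invented here.

```python
import numpy as np


def eskf_demo(T=60.0, dt=0.01, true_bias=0.1, accel_std=0.05, gnss_std=0.5, seed=1):
    """1D error-state KF sketch; returns (final position error in m, estimated accel bias in m/s^2)."""
    rng = np.random.default_rng(seed)
    p, v, b = 0.0, 0.0, 0.0           # nominal state; b is the accelerometer-bias estimate
    p_true, v_true = 0.0, 0.0
    P = np.diag([0.25, 0.25, 0.04])   # covariance of the error state [dp, dv, db]
    # Linearized error dynamics: dp' = dv, dv' = -db - accel noise, db' = slow random walk.
    F = np.array([[1.0, dt, -0.5 * dt**2],
                  [0.0, 1.0, -dt],
                  [0.0, 0.0, 1.0]])
    Q = np.diag([0.0, (accel_std * dt)**2, 1e-8 * dt])  # position noise term neglected for brevity
    H = np.array([1.0, 0.0, 0.0])     # GNSS observes the position error directly
    R = gnss_std**2
    gnss_every = round(1.0 / dt)

    for k in range(1, round(T / dt) + 1):
        a_true = 0.5 * np.sin(0.3 * k * dt)                      # made-up true acceleration
        a_imu = a_true + true_bias + rng.normal(0.0, accel_std)  # biased, noisy accelerometer
        p_true += v_true * dt + 0.5 * a_true * dt**2
        v_true += a_true * dt

        # 1) Propagate the nominal state with the bias-corrected IMU reading (no filtering here).
        a = a_imu - b
        p += v * dt + 0.5 * a * dt**2
        v += a * dt
        # 2) Propagate only the error-state covariance; the error mean stays zero between fixes.
        P = F @ P @ F.T + Q

        if k % gnss_every == 0:
            z = p_true + rng.normal(0.0, gnss_std)
            # 3) Estimate the error state from the GNSS residual.
            S = H @ P @ H + R
            K = P @ H / S
            dx = K * (z - p)
            P = (np.eye(3) - np.outer(K, H)) @ P
            # 4) Inject the error into the nominal state; the error mean is now zero again
            #    (the reset Jacobian is the identity for this additive 1D model, so P is unchanged).
            p, v, b = p + dx[0], v + dx[1], b + dx[2]

    return p - p_true, b
```

Because the bias is part of the error state, the filter learns it from the GNSS residuals, so the returned bias estimate should end up close to the simulated 0.1 m/s^2.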

Control Integration and Carla-Based Simulation

The control module converts the vehicle's estimated state into steering, acceleration, and braking commands that guide it along a desired path. By regulating both direction and speed, it keeps the motion smooth and stable. These control outputs are applied in the Carla simulator, enabling realistic visualization and testing of how high-level decisions translate into real-time driving behavior.
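A minimal sketch of such a controller pair, assuming a PID speed controller whose signed output is split into throttle or brake, and a Stanley-style lateral controller that combines heading error with cross-track error. The text does not say which control laws the project uses, so both choices, the gains, the straight-segment path, and all names are assumptions.

```python
import math


def wrap_angle(a):
    """Wrap an angle to [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


class SpeedPID:
    """Longitudinal PID on speed error; returns (throttle, brake), each in [0, 1]."""

    def __init__(self, kp=0.5, ki=0.1, kd=0.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def step(self, v_target, v, dt):
        error = v_target - v
        self.integral += error * dt
        deriv = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        u = self.kp * error + self.ki * self.integral + self.kd * deriv
        # Positive command -> throttle, negative command -> brake.
        if u >= 0.0:
            return min(u, 1.0), 0.0
        return 0.0, min(-u, 1.0)


def stanley_steer(x, y, yaw, v, a, b, k=1.0, k_soft=1.0, max_steer=math.radians(30)):
    """Stanley steering toward the straight path a->b.

    (x, y, yaw) is the front-axle pose; returns a steering angle in rad, positive = left.
    """
    path_yaw = math.atan2(b[1] - a[1], b[0] - a[0])
    heading_err = wrap_angle(path_yaw - yaw)
    # Signed lateral offset from the path: positive when the vehicle is left of it.
    tx, ty = math.cos(path_yaw), math.sin(path_yaw)
    offset = tx * (y - a[1]) - ty * (x - a[0])
    # A vehicle left of the path (offset > 0) gets a rightward (negative) correction;
    # k_soft keeps the term bounded at low speed.
    delta = heading_err + math.atan2(-k * offset, k_soft + v)
    return max(-max_steer, min(max_steer, delta))
```

In CARLA, commands are applied through `carla.VehicleControl`, whose `throttle` and `brake` fields take values in [0, 1] and `steer` takes values in [-1, 1]. The steering angle above would need to be normalized by the vehicle's maximum steering angle, and its sign checked against CARLA's left-handed coordinate frame.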

Dive Into the Code Behind the System

All modules in this project are fully open source and available on GitHub, allowing anyone to explore the implementation details, run the simulations, or build upon the framework. The repository provides clear, modular code for vehicle modeling, estimation, control, and Carla integration, making it a practical resource for learning, experimentation, and further research in autonomous driving.